HDS VSP-G and VSP command device setup

Hitachi CCI can be configured to get data from the storage either over the LAN or through a command device (LUN/volume). A command device is recommended when you have many storage systems to monitor (HDS recommends it for 40+).

LAN configuration is described in our standard storage access manual.

This document describes installation on Linux RHEL 7.3.

Use the lpar2rrd user instead of the stor2rrd user in all examples below if you are on the Xorux Virtual Appliance.

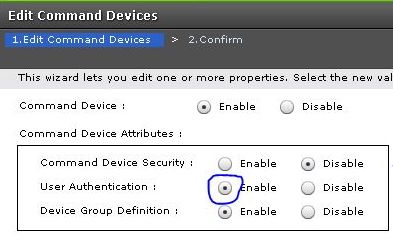

First, make sure that the following is set up on the storage:

- Create a volume for the command device on the storage (no more than 1 GB).

- Connect it through 2 ports (it's enough).

- Rescan the FC bus (or reboot the host):

      # echo "1" > /sys/class/fc_host/host1/issue_lip
      # echo "1" > /sys/class/fc_host/host2/issue_lip
      # echo "- - -" > /sys/class/scsi_host/host2/scan
      # echo "- - -" > /sys/class/scsi_host/host1/scan
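The host numbers (host1, host2) differ from server to server. As a minimal sketch, assuming you want to rescan every HBA rather than name each one, the same writes can be looped over all hosts found in sysfs. The function name `rescan_fc` and its optional sysfs-root argument are hypothetical and exist only for this illustration.

```sh
#!/bin/sh
# Rescan all FC HBAs and SCSI hosts found under a sysfs root.
# The optional first argument (default /sys) is an assumption added
# so the function can be tried against a scratch directory.
rescan_fc() {
    root="${1:-/sys}"
    # Issue a LIP on each Fibre Channel host to rediscover the fabric.
    for f in "$root"/class/fc_host/host*/issue_lip; do
        [ -e "$f" ] || continue
        echo "1" > "$f"
    done
    # Ask each SCSI host to scan all channels, targets and LUNs.
    for f in "$root"/class/scsi_host/host*/scan; do
        [ -e "$f" ] || continue
        echo "- - -" > "$f"
    done
}

# Usage (as root): rescan_fc
```

Note that this sketch scans every SCSI host, not only the FC ones, which takes slightly longer on hosts with many controllers.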

- Check that the command devices are visible:

      # ls /dev/sd* | /HORCM/usr/bin/inqraid -CLI -fx
      DEVICE_FILE  PORT   SERIAL  LDEV  CTG  H/M/12  SSID  R:Group  PRODUCT_ID
      sda          -      -       -     -    -       -     -        LOGICAL VOLUME
      sdf          CL1-A  87011   900   -    -       000B  5:09-01  OPEN-V-CM
      sdg          CL2-A  87012   900   -    -       000B  5:09-01  OPEN-V-CM
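In the output above the command devices are the rows whose PRODUCT_ID ends in `-CM`. A small filter can pull out just those rows together with the array serial. This is a sketch that assumes the column layout shown above; `list_cmd_devices` is a hypothetical helper name.

```sh
#!/bin/sh
# Read `inqraid -CLI` output on stdin and print "device serial"
# for every row whose last field ends in -CM (a command device).
list_cmd_devices() {
    awk 'NR > 1 && $NF ~ /-CM$/ { print "/dev/" $1, $3 }'
}

# Usage:
#   ls /dev/sd* | /HORCM/usr/bin/inqraid -CLI -fx | list_cmd_devices
```

The serial printed in the second column is the value that goes into the `CMD-<serial>` part of the HORCM configuration.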

- Make sure the port numbers are not already in use:

      # netstat -an | egrep "11001|11002"
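A plain `egrep` also matches the digits anywhere in a line (for example port 110010 or a remote address). As a sketch, assuming the Linux `netstat -an` layout where the local address is the fourth column, the check can be narrowed to an exact local port. `port_in_use` is a hypothetical helper name.

```sh
#!/bin/sh
# Exit 0 if the given port is the local port of any tcp/udp row
# in `netstat -an` style input on stdin, exit 1 otherwise.
port_in_use() {
    awk -v p="$1" '
        # Column 4 is the local address; the port follows the last colon.
        $1 ~ /^(tcp|udp)/ { n = split($4, a, ":"); if (a[n] == p) found = 1 }
        END { exit (found ? 0 : 1) }'
}

# Usage:
#   for p in 11001 11002; do
#       netstat -an | port_in_use "$p" && echo "port $p is already in use"
#   done
```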

- Create the HORCM configuration files by inserting the stanzas below, and change:

- port numbers (11001 vs. 11002)

- storage serials (CMD-87011 vs. CMD-87012)

      # vi /etc/horcm1.conf
      HORCM_MON
      # ip_address service poll(10ms) timeout(10ms)
      localhost 11001 1000 3000

      HORCM_CMD
      # dev_name dev_name dev_name
      \\.\CMD-87011:/dev/sd*

Note: we have received reports that "\\.\CMD-87011:/dev/sd*" does not work in all cases; if that happens, use "/dev/sdf" instead in the example above.

      # vi /etc/horcm2.conf
      HORCM_MON
      # ip_address service poll(10ms) timeout(10ms)
      localhost 11002 1000 3000

      HORCM_CMD
      # dev_name dev_name dev_name
      \\.\CMD-87012:/dev/sd*
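Since the two files differ only in the port and the serial, they can also be generated instead of edited by hand. The following is a sketch only: `make_horcm_conf` is a hypothetical helper, and its optional third argument replaces the `\\.\CMD-<serial>:/dev/sd*` form with a plain device file such as /dev/sdf, for the case mentioned in the note.

```sh
#!/bin/sh
# Print a minimal HORCM configuration to stdout.
# Args: port, array serial, optional plain device file (e.g. /dev/sdf).
make_horcm_conf() {
    port="$1"; serial="$2"; devfile="${3:-}"
    printf '%s\n' \
        'HORCM_MON' \
        '# ip_address service poll(10ms) timeout(10ms)' \
        "localhost $port 1000 3000" \
        '' \
        'HORCM_CMD' \
        '# dev_name dev_name dev_name'
    if [ -n "$devfile" ]; then
        printf '%s\n' "$devfile"
    else
        # Backslashes are doubled because printf interprets the format string.
        printf '\\\\.\\CMD-%s:/dev/sd*\n' "$serial"
    fi
}

# Usage (as root):
#   make_horcm_conf 11001 87011 > /etc/horcm1.conf
#   make_horcm_conf 11002 87012 > /etc/horcm2.conf
```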

Attention: the stor2rrd user, which runs HORCM, must have read/write access to these devices:

      # ls -l /dev/sdf /dev/sdg
      brw-rw---- 1 stor2rrd disk 8, 80 Jun 14 16:35 sdf
      brw-rw---- 1 stor2rrd disk 8, 96 Jun 14 16:39 sdg

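This appears to work, but it will not survive a reboot, because /dev is a devtmpfs that is recreated at every boot, so the device ownership is reset:

      devtmpfs on /dev type devtmpfs (rw,nosuid,size=16358024k,nr_inodes=4089506,mode=755)

To avoid this, add the user to the disk group (-a appends the group and keeps the user's existing supplementary groups):

      # usermod -a -G disk stor2rrd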
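To confirm that the group change took effect, the membership can be checked with `id`. This is a small sketch; `user_in_group` is a hypothetical helper name. Keep in mind that group changes apply only to new login sessions.

```sh
#!/bin/sh
# Exit 0 if the user belongs to the group (primary or supplementary).
user_in_group() {
    # id -nG prints the user's group names separated by spaces.
    id -nG "$1" | tr ' ' '\n' | grep -qxF -- "$2"
}

# Usage:
#   user_in_group stor2rrd disk || echo "stor2rrd is not in group disk"
```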

- Run HORCM:

      # su - stor2rrd -c "/HORCM/usr/bin/horcmstart.sh 1 2"
      # ps -ef | grep horcm
      stor2rrd 19660912 1 0 Feb 26 - 0:03 horcmd_01
      stor2rrd 27590770 1 0 Feb 26 - 0:09 horcmd_02

- Do not forget to create a user on the array management console (with an encrypted password).

Make sure that the Performance Monitor on the storage is enabled.

Follow this documentation to fully prepare your storage for monitoring.

- Add the service to autostart:

      # vi /etc/systemd/system/horcmsrv.service
      [Unit]
      Description=HORCM Service
      After=network.target

      [Service]
      Type=idle
      RemainAfterExit=yes
      User=stor2rrd
      Group=stor2rrd
      ExecStart=/HORCM/horcmstart.sh
      ExecStop=/HORCM/horcmstop.sh

      [Install]
      WantedBy=multi-user.target

      # vi /HORCM/horcmstart.sh
      #!/bin/sh
      sh /HORCM/usr/bin/horcmstart.sh 1 2

      # vi /HORCM/horcmstop.sh
      #!/bin/sh
      sh /HORCM/usr/bin/horcmshutdown.sh 1 2

      # chmod +x /HORCM/horcmstart.sh
      # chmod +x /HORCM/horcmstop.sh
      # chown stor2rrd:stor2rrd /HORCM/horcmstart.sh
      # chown stor2rrd:stor2rrd /HORCM/horcmstop.sh
      # systemctl enable horcmsrv.service
      # systemctl daemon-reload
      # systemctl start horcmsrv
      # systemctl status horcmsrv
      horcmsrv.service - HORCM Service
         Loaded: loaded (/etc/systemd/system/horcmsrv.service; enabled; vendor preset: disabled)
         Active: active (exited) since Tue 2017-06-20 13:19:03 MSK; 1s ago
        Process: 14249 ExecStart=/HORCM/horcmstart.sh (code=exited, status=0/SUCCESS)
       Main PID: 14249 (code=exited, status=0/SUCCESS)
         CGroup: /system.slice/horcmsrv.service
                 14255 horcmd_01
                 14264 horcmd_02

      Jun 20 13:19:03 mon-rrd systemd[1]: Started HORCM Service.
      Jun 20 13:19:03 mon-rrd systemd[1]: Starting HORCM Service...
      Jun 20 13:19:03 mon-rrd horcmstart.sh[14249]: starting HORCM inst 1
      Jun 20 13:19:04 mon-rrd horcmstart.sh[14249]: HORCM inst 1 starts successfully.
      Jun 20 13:19:04 mon-rrd horcmstart.sh[14249]: starting HORCM inst 2
      Jun 20 13:19:04 mon-rrd horcmstart.sh[14249]: HORCM inst 2 starts successfully.

If you add another HORCM instance, edit /HORCM/horcmstart.sh and /HORCM/horcmstop.sh accordingly.
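One way to avoid editing both wrapper scripts for every new instance is to derive the instance numbers from the configuration files themselves. This is only a sketch, assuming every /etc/horcmN.conf present should be started; `list_horcm_instances` is a hypothetical helper, not part of HORCM.

```sh
#!/bin/sh
# Print the instance number of each horcmN.conf in a directory
# (default /etc), one per line, in numeric order.
list_horcm_instances() {
    dir="${1:-/etc}"
    for f in "$dir"/horcm[0-9]*.conf; do
        [ -e "$f" ] || continue
        n="${f##*/horcm}"   # strip the path and the "horcm" prefix
        n="${n%.conf}"      # strip the ".conf" suffix
        # Skip names that are not purely numeric, e.g. horcm1x.conf.
        case "$n" in
            *[!0-9]*) continue ;;
        esac
        printf '%s\n' "$n"
    done | sort -n
}

# Usage inside /HORCM/horcmstart.sh (word splitting is intended):
#   sh /HORCM/usr/bin/horcmstart.sh $(list_horcm_instances)
```

The trade-off is that a leftover configuration file would also be started, so the explicit list used above remains the safer choice when unused files may exist in /etc.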