Inspur monitoring

If you use the XoruX Virtual Appliance:

- Use the local account lpar2rrd, which hosts STOR2RRD on the Virtual Appliance

- Use /home/stor2rrd/stor2rrd as the product home

Prerequisites

- Allow access from the STOR2RRD host to the Inspur storage on port 22 (ssh).

Test whether the port is open:

$ perl /home/stor2rrd/stor2rrd/bin/conntest.pl 192.168.1.1 22

Connection to "192.168.1.1" on port "22" is ok

Storage access

There are two ways to connect to the storage:

- Use a user name and password

Create a new user "stor2rrd" on the storage:

- Storage firmware 8.3.1.2+: use the "Monitor" role

$ ssh superuser@<storage control enclosure IP address> "svctask mkuser -name stor2rrd -usergrp Monitor"

- Storage firmware 8+ up to 8.3.1.2: use the "Restricted Administrator" role

$ ssh superuser@<storage control enclosure IP address> "svctask mkuser -name stor2rrd -usergrp RestrictedAdmin"

- Older firmware: use the "Administrator" role.

It is the lowest role that allows retrieving statistics from the storage. Read this for an explanation.

$ ssh superuser@<storage control enclosure IP address> "svctask mkuser -name stor2rrd -usergrp Administrator"

- Use SSH keys

- Create SSH keys on the STOR2RRD host under the stor2rrd user (lpar2rrd on the XoruX Virtual Appliance) if they do not exist yet.

They should already exist on the Virtual Appliance, so skip this step there.

Press Enter at the prompt to accept the default key file; -N "" already sets an empty passphrase.

# su - stor2rrd # (use lpar2rrd user on the Appliance)

$ ls -l ~/.ssh/id_rsa.pub

$ ssh-keygen -t rsa -N ""

Generating public/private rsa key pair.

Enter file in which to save the key (/home/stor2rrd/.ssh/id_rsa):

...

$ ls -l ~/.ssh/id_rsa.pub

-rw-r--r-- 1 stor2rrd stor2rrd 382 Jun 1 12:47 /home/stor2rrd/.ssh/id_rsa.pub

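Newer storage devices might require a stronger key. In that case use the command below instead of the RSA one above. The key is then saved as ~/.ssh/id_ecdsa (public part ~/.ssh/id_ecdsa.pub), so use those files instead of id_rsa and id_rsa.pub in the following steps.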

$ ssh-keygen -t ecdsa -b 521 -N ""

- Copy the SSH public key to the storage as the storage superuser

For IBM Storwize V7000 Unified, use one of the "Control Enclosure" IPs (never the "File Module" IP).

See the detailed description of IBM Storwize V7000 Unified.

$ scp ~/.ssh/id_rsa.pub superuser@<storage control enclosure IP address>:/tmp/123tmp

- Create a new user "stor2rrd" on the storage

- Storage firmware 8.3.1.2+: use the "Monitor" role

$ ssh superuser@<storage control enclosure IP address> "svctask mkuser -name stor2rrd -usergrp Monitor -keyfile /tmp/123tmp"

- Storage firmware 8+ up to 8.3.1.2: use the "Restricted Administrator" role

$ ssh superuser@<storage control enclosure IP address> "svctask mkuser -name stor2rrd -usergrp RestrictedAdmin -keyfile /tmp/123tmp"

- Older firmware: use the "Administrator" role.

It is the lowest role that allows retrieving statistics from the storage. Read this for an explanation.

$ ssh superuser@<storage control enclosure IP address> "svctask mkuser -name stor2rrd -usergrp Administrator -keyfile /tmp/123tmp"

- If the stor2rrd user already exists on the storage, assign it the new SSH key:

$ ssh superuser@<storage control enclosure IP address> "svctask chuser -keyfile /tmp/123tmp stor2rrd"

- Check that it works:

$ ssh -i ~/.ssh/id_rsa stor2rrd@<storage control enclosure IP address> "svcinfo lssystem"

id 00000100C0906BAA

name SVC

location local

partnership

bandwidth

...

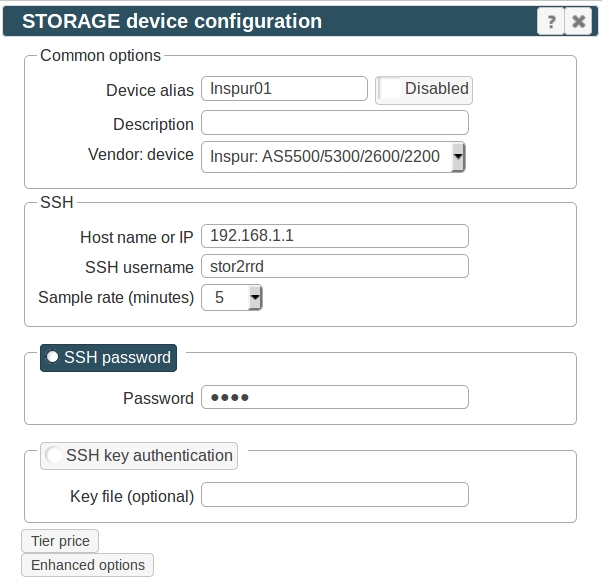
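The firmware-to-role mapping above is the same for both connection methods. The shell sketch below encodes that mapping so the right mkuser command can be generated from a firmware level. The function names (version_ge, role_for_firmware, mkuser_cmd) are hypothetical and not part of STOR2RRD; the sketch assumes a sort that supports -V (GNU coreutils), and it only prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helpers, not shipped with STOR2RRD.
# Assumption: "sort -V" (version sort) is available, as in GNU coreutils.

# Succeeds when version $1 is greater than or equal to version $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Lowest role that can read statistics, per the firmware ranges in this guide.
role_for_firmware() {
    fw=$1
    if version_ge "$fw" 8.3.1.2; then
        echo Monitor
    elif version_ge "$fw" 8; then
        echo RestrictedAdmin
    else
        echo Administrator
    fi
}

# Print (do not execute) the mkuser command.
# Optional second argument: key file already copied to the storage.
mkuser_cmd() {
    role=$(role_for_firmware "$1")
    if [ -n "$2" ]; then
        echo "svctask mkuser -name stor2rrd -usergrp $role -keyfile $2"
    else
        echo "svctask mkuser -name stor2rrd -usergrp $role"
    fi
}
```

After sourcing the helpers, the generated command can be passed to ssh (8.4.0.0 is only an example firmware level):

$ ssh superuser@<storage control enclosure IP address> "$(mkuser_cmd 8.4.0.0 /tmp/123tmp)"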

STOR2RRD storage configuration

- Perform all actions below as the stor2rrd user (lpar2rrd on the Virtual Appliance)

- Add the storage to the configuration from the UI:

Settings icon ➡ Storage ➡ New ➡ Vendor:device ➡ Inspur

- Make sure there is enough disk space on the filesystem where STOR2RRD is installed.

As a rough estimate, count on 2 - 30 GB per storage, depending on the number of volumes (about 30 GB for 5000 volumes).

$ df -g /home # AIX

$ df -h /home # Linux
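The df check above can be turned into a quick pass/fail test. This is a minimal sketch, assuming the worst case from the sizing note (30 GB per storage) and a df that supports the POSIX -P output format; the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical pre-flight disk check, not part of STOR2RRD.
# Assumption: budget the upper bound of the sizing note, 30 GB per storage.

# Free space in whole GB on the filesystem holding $1.
# "df -Pk" prints one line per filesystem in 1 KB blocks; column 4 is "Available".
free_gb() {
    df -Pk "$1" | awk 'NR == 2 { print int($4 / 1048576) }'
}

# Worst-case space needed for $1 storages.
required_gb() {
    echo $(( $1 * 30 ))
}

# Prints OK or LOW; returns non-zero when free space is below the estimate.
check_space() {
    path=$1
    storages=$2
    have=$(free_gb "$path")
    need=$(required_gb "$storages")
    if [ "$have" -ge "$need" ]; then
        echo "OK: ${have} GB free, about ${need} GB needed"
    else
        echo "LOW: ${have} GB free, about ${need} GB needed"
        return 1
    fi
}
```

For example, check_space /home 2 compares the free space under /home against 60 GB for two storages.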

- Test storage connectivity, either from the UI or from the command line:

$ cd /home/stor2rrd/stor2rrd

$ ./bin/config_check.sh

=========================

STORAGE: storwize01: SWIZ

=========================

ssh -o ConnectTimeout=15 -i /home/stor2rrd/.ssh/id_rsa stor2rrd@192.168.1.1 "lscurrentuser"

connection ok

- Schedule the storage agent in the stor2rrd crontab (lpar2rrd on the Virtual Appliance, where the entry might already exist)

$ crontab -l | grep load_svcperf.sh

$

Add the entry if it does not exist (empty output, as above):

$ crontab -e

# Inspur storage agent (same as IBM Storwize/SVC)

0,5,10,15,20,25,30,35,40,45,50,55 * * * * /home/stor2rrd/stor2rrd/load_svcperf.sh > /home/stor2rrd/stor2rrd/load_svcperf.out 2>&1

Make sure the crontab also contains the hourly entry that builds the UI:

$ crontab -e

# STOR2RRD UI (just ONE entry of load.sh must be there)

5 * * * * /home/stor2rrd/stor2rrd/load.sh > /home/stor2rrd/stor2rrd/load.out 2>&1
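Both crontab steps follow the same rule: add a line only if it is not there yet. The sketch below is a hypothetical helper (add_cron_once is not part of STOR2RRD) that reads the current crontab on stdin and writes it back with the line appended only when no existing line contains the given pattern, so running it twice does not create duplicates.

```shell
#!/bin/sh
# Hypothetical helper, not part of STOR2RRD.
# Usage: crontab -l | add_cron_once PATTERN LINE | crontab -
add_cron_once() {
    pattern=$1
    line=$2
    current=$(cat)
    # Re-emit the existing entries unchanged; command substitution dropped
    # the trailing newline, so printf adds it back.
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # Fixed-string match (-F): the dot in "load_svcperf.sh" is literal.
    if ! printf '%s\n' "$current" | grep -qF -- "$pattern"; then
        printf '%s\n' "$line"
    fi
}
```

For example, on Linux, after sourcing the helper (keep a backup first, because crontab - replaces the whole table):

$ crontab -l > ~/crontab.bak

$ crontab -l | add_cron_once load_svcperf.sh "0,5,10,15,20,25,30,35,40,45,50,55 * * * * /home/stor2rrd/stor2rrd/load_svcperf.sh > /home/stor2rrd/stor2rrd/load_svcperf.out 2>&1" | crontab -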

- Let the storage agent run for 15 - 20 minutes to collect data, then:

$ cd /home/stor2rrd/stor2rrd

$ ./load.sh

- Go to the web UI: http://<your web server>/stor2rrd/

Use Ctrl-F5 to refresh the web browser cache.