Kafka NB server deployment on STOR2RRD appliance

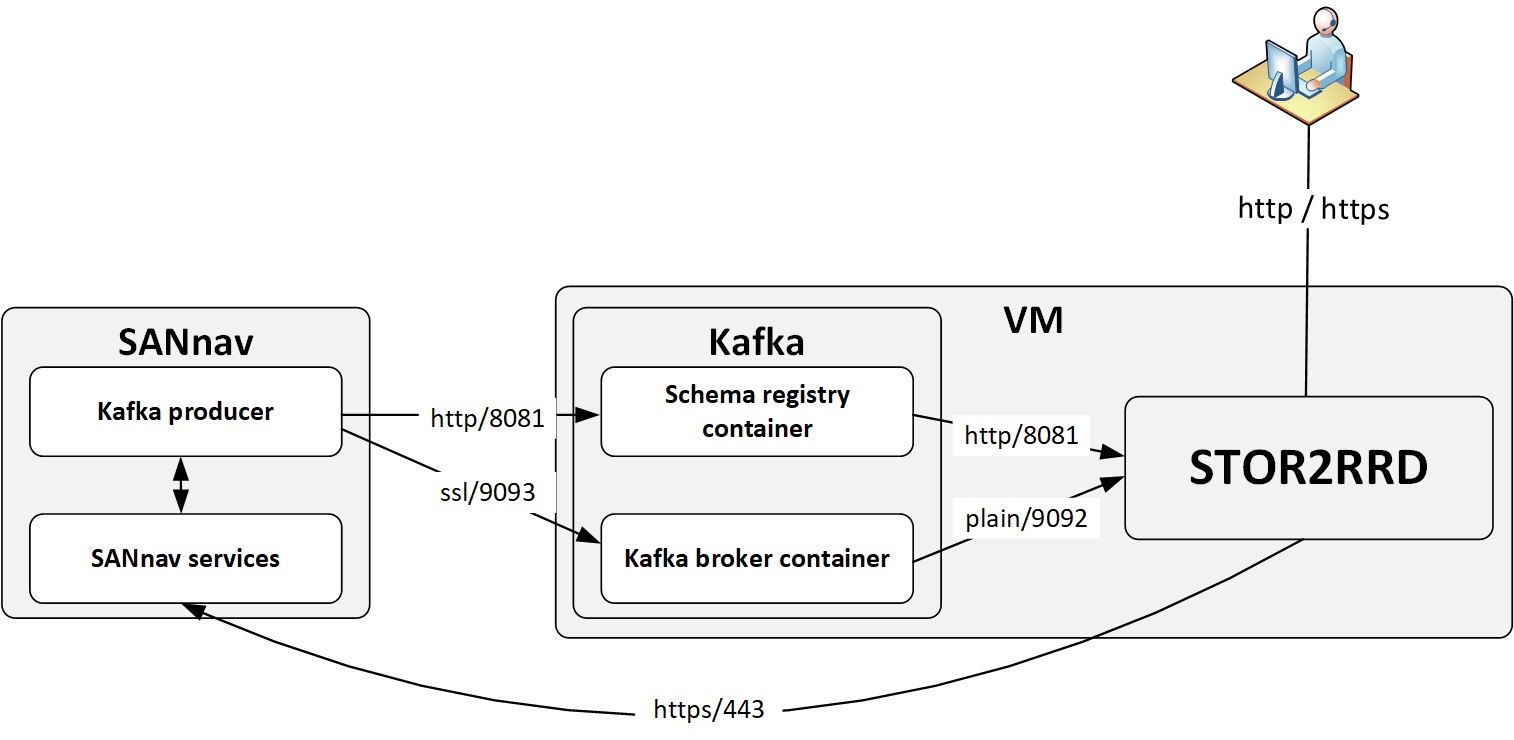

How to deploy a single-node Kafka cluster with schema registry in Docker containers running on STOR2RRD.


This procedure is intended for testing SANnav monitoring in STOR2RRD.

The solution described in this guide comes without guarantee or support.


Deploy Kafka on STOR2RRD host in Docker containers

Deployment overview

- Install required software packages

- Allow stor2rrd to use Docker

- Create configuration

- Deploy Kafka NB server

- Register Kafka NB server in SANnav

Install required software packages

- Docker 20.10 or later

- Docker Compose 2.6.0 or later

- jq (command-line JSON processor)

- curl

```shell
[root@xorux]# dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
[root@xorux]# dnf install docker-ce docker-ce-cli containerd.io jq.x86_64 curl.x86_64
[root@xorux]# systemctl start docker
[root@xorux]# systemctl enable docker
[root@xorux]# curl -L "https://github.com/docker/compose/releases/download/v2.6.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
[root@xorux]# chmod +x /usr/local/bin/docker-compose
[root@xorux]# ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose
```
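To check the installed versions against the minimums listed above, a small comparison helper is enough. This is a sketch, not part of STOR2RRD: version_ge and check_versions are hypothetical names, and the comparison assumes GNU sort with the -V (version sort) option.

```shell
#!/usr/bin/env bash
# version_ge VERSION MINIMUM (hypothetical helper): succeed when VERSION >= MINIMUM.
# Both strings are sorted with GNU sort -V; if MINIMUM comes first (or they are
# equal), VERSION is not older than MINIMUM.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_versions (hypothetical helper): compare the installed Docker engine and
# Docker Compose with the minimums from this guide. Run it on the STOR2RRD host.
check_versions() {
  local docker_ver compose_ver rc=0
  docker_ver=$(docker version --format '{{.Server.Version}}') || return 1
  compose_ver=$(docker-compose version --short) || return 1
  compose_ver=${compose_ver#v}   # strip a leading "v" if the build prints one
  if ! version_ge "$docker_ver" 20.10; then
    echo "Docker $docker_ver is older than 20.10"; rc=1
  fi
  if ! version_ge "$compose_ver" 2.6.0; then
    echo "Docker Compose $compose_ver is older than 2.6.0"; rc=1
  fi
  return "$rc"
}

# Usage (as root):
#   check_versions && echo "Docker and Docker Compose versions are sufficient"
```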

Allow stor2rrd to use Docker

Note: On the Virtual Appliance (OVF image), use the lpar2rrd user instead of stor2rrd.

```shell
[root@xorux]# usermod -a -G docker stor2rrd
```

Log in as stor2rrd and check the Docker status:

```shell
[root@xorux]# su - stor2rrd
[stor2rrd@xorux]$ docker ps
CONTAINER ID   IMAGE     COMMAND   CREATED   STATUS    PORTS     NAMES
```
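Group membership is read at login, so a shell that was already open before usermod still lacks the docker group; a fresh su - login picks it up. To check the group database itself, here is a minimal sketch (in_group is a hypothetical helper, not a STOR2RRD script):

```shell
#!/usr/bin/env bash
# in_group USER GROUP (hypothetical helper): succeed when USER is a member of
# GROUP according to the group database, i.e. what `id -nG USER` reports.
# A session opened before the change keeps its old group list until re-login.
in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qx -- "$2"
}

# Usage (as root):
#   in_group stor2rrd docker && echo "stor2rrd is in the docker group"
```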

Create configuration

This procedure creates the /home/stor2rrd/stor2rrd/etc/kafkanb directory and populates it with the necessary configuration files.

You may be asked to enter the path to the STOR2RRD directory.

You will have to confirm or enter the local host's IP address; SANnav connects to the Kafka NB server on this address.
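To find the address to confirm, one option is to ask the kernel which source address it would use to reach SANnav. `ip route get` only looks up the routing table and sends no traffic. The helper below is a hypothetical sketch that extracts the src field from its output; 192.168.0.10 is the example SANnav address used in the troubleshooting listing of this guide.

```shell
#!/usr/bin/env bash
# route_src_ip (hypothetical helper): read `ip -4 route get <address>` output on
# stdin and print the value that follows the "src" keyword, i.e. the local
# address the kernel would use as the source for that destination.
route_src_ip() {
  awk '{
    for (i = 1; i < NF; i++) {
      if ($i == "src") { print $(i + 1); exit }
    }
  }'
}

# Example: which local address is used to reach SANnav?
#   ip -4 route get 192.168.0.10 | route_src_ip
```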

- Create /home/stor2rrd/stor2rrd/etc/kafka_docker_compose.yml with the following content:

```shell
[stor2rrd@xorux]$ vi /home/stor2rrd/stor2rrd/etc/kafka_docker_compose.yml
```

```yaml
version: '3.2'
services:
  zookeeper:
    container_name: kafkanb_zookeeper
    restart: unless-stopped
    image: confluentinc/cp-zookeeper:latest
    ports:
      - "2181:2181"
    volumes:
      - ${KAFKA_RUN}/logs/zookeeper:/var/log/kafka
      - ${KAFKA_RUN}/data/zookeeper/data:/var/lib/zookeeper/data
      - ${KAFKA_RUN}/data/zookeeper/txlogs:/var/lib/zookeeper/log
    environment:
      ZOOKEEPER_SERVER_ID: 110
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000
      ZOOKEEPER_INIT_LIMIT: 5
      ZOOKEEPER_SYNC_LIMIT: 2
      ZOOKEEPER_AUTOPURGE_PURGE_INTERVAL: 24
      ZOOKEEPER_AUTOPURGE_SNAP_RETAIN_COUNT: 3
    user: ${USER_UID}
  broker:
    container_name: kafkanb_broker
    restart: unless-stopped
    image: confluentinc/cp-kafka:latest
    depends_on:
      - zookeeper
    ports:
      - '9092:9092'
      - '9093:9093'
    volumes:
      - ${KAFKA_RUN}/logs/kafka:/var/log/kafka
      - ${KAFKA_RUN}/data/kafka/data:/var/lib/kafka/data
      - ${KAFKA_RUN}/data/kafka/txlogs:/var/lib/kafka/log
      - ${KAFKA_RUN}/kafka/certs/keystore:/etc/kafka/secrets
    environment:
      KAFKA_BROKER_ID: 110
      KAFKA_ZOOKEEPER_CONNECT: 'zookeeper:2181'
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://${KAFKANB_IP}:9092,SSL://${KAFKANB_IP}:9093'
      KAFKA_SSL_KEY_CREDENTIALS: server_secret
      KAFKA_SSL_KEYSTORE_CREDENTIALS: server_secret
      KAFKA_SSL_TRUSTSTORE_CREDENTIALS: server_secret
      KAFKA_ssl.keystore.type: PKCS12
      KAFKA_ssl.truststore.type: PKCS12
      KAFKA_SSL_KEYSTORE_FILENAME: kafka.server.keystore.p12
      KAFKA_SSL_TRUSTSTORE_FILENAME: kafka.server.truststore.p12
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
    user: ${USER_UID}
  schema_registry:
    container_name: kafkanb_schema_registry
    restart: unless-stopped
    image: confluentinc/cp-schema-registry:latest
    depends_on:
      - zookeeper
      - broker
    ports:
      - '8081:8081'
    environment:
      SCHEMA_REGISTRY_HOST_NAME: schema_registry
      SCHEMA_REGISTRY_KAFKASTORE_CONNECTION_URL: 'zookeeper:2181'
      SCHEMA_REGISTRY_KAFKASTORE_BOOTSTRAP_SERVERS: 'PLAINTEXT://${KAFKANB_IP}:9092'
      SCHEMA_REGISTRY_LISTENERS: http://0.0.0.0:8081
    user: ${USER_UID}
```

- Run the kafka_configure.sh script:

```shell
[stor2rrd@xorux]$ cd /home/stor2rrd/stor2rrd/bin
[stor2rrd@xorux]$ ./kafka_configure.sh
```
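The compose file above refers to three variables, KAFKA_RUN, USER_UID and KAFKANB_IP, which are presumably supplied by the STOR2RRD scripts (this guide does not say how). If you ever run docker-compose against the file by hand, each of them must be exported in your shell; Compose can also read a .env file, which this check ignores. A hypothetical helper that lists the placeholders and reports the unexported ones:

```shell
#!/usr/bin/env bash
# check_compose_vars FILE (hypothetical helper): list every ${VAR} placeholder
# in a compose file and print the ones that are not exported in the current
# environment. Returns 0 when all of them are exported, 1 otherwise.
check_compose_vars() {
  local file=$1 var missing=0
  for var in $(grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$file" | tr -d '${}' | sort -u); do
    if ! printenv "$var" >/dev/null; then
      echo "not exported: $var"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (values are examples only; the STOR2RRD scripts normally provide them):
#   export KAFKA_RUN=/home/stor2rrd/stor2rrd/kafkanb-run USER_UID=$(id -u) KAFKANB_IP=192.168.0.24
#   check_compose_vars /home/stor2rrd/stor2rrd/etc/kafka_docker_compose.yml &&
#     docker-compose -f /home/stor2rrd/stor2rrd/etc/kafka_docker_compose.yml config
```

`docker-compose config` prints the file with the values substituted, which makes a wrong IP address in the advertised listeners easy to spot.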

Deploy Kafka NB server

This procedure creates the /home/stor2rrd/stor2rrd/kafkanb-run directory, pulls the required images from Docker Hub, and starts the Kafka NB containers.
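If the STOR2RRD host has no access to Docker Hub, one way to handle the optional manual upload is to pull the three images on a machine that does have access, export them with `docker save`, and import them on the STOR2RRD host with `docker load`. This sketch assumes that kafka_deploy.sh then uses the locally present images; compose_images and the archive name kafkanb-images.tar are hypothetical.

```shell
#!/usr/bin/env bash
# compose_images FILE (hypothetical helper): print the unique image references
# from the "image:" lines of a compose file, one per line, quotes removed.
compose_images() {
  awk '$1 == "image:" { print $2 }' "$1" | tr -d "\"'" | sort -u
}

# On a machine with Internet access (file names are examples):
#   images=$(compose_images kafka_docker_compose.yml)
#   for i in $images; do docker pull "$i"; done
#   docker save -o kafkanb-images.tar $images   # unquoted on purpose: one argument per image
# Copy kafkanb-images.tar to the STOR2RRD host and, as stor2rrd:
#   docker load -i kafkanb-images.tar
```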

- (Optional) Upload the necessary Docker images manually

- (Optional) Configure an HTTP proxy for Docker

- Run the kafka_deploy.sh script:

```shell
[stor2rrd@xorux]$ cd /home/stor2rrd/stor2rrd/bin
[stor2rrd@xorux]$ ./kafka_deploy.sh
```
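For the optional proxy step: image pulls are performed by the Docker daemon, so the proxy has to be configured for the daemon, not only in the user's shell, and it must be in place before kafka_deploy.sh runs. On systemd hosts the usual way is a drop-in file for docker.service. The helper name and the proxy URL below are placeholders.

```shell
#!/usr/bin/env bash
# write_docker_proxy_dropin DIR PROXY_URL NO_PROXY (hypothetical helper): write
# DIR/http-proxy.conf, a systemd drop-in that sets proxy variables for the
# Docker daemon.
write_docker_proxy_dropin() {
  local dir=$1 proxy=$2 no_proxy=$3
  mkdir -p "$dir"
  cat > "$dir/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=$proxy"
Environment="HTTPS_PROXY=$proxy"
Environment="NO_PROXY=$no_proxy"
EOF
}

# Usage as root (the proxy address is an example):
#   write_docker_proxy_dropin /etc/systemd/system/docker.service.d \
#       http://proxy.example.com:3128 localhost,127.0.0.1
#   systemctl daemon-reload
#   systemctl restart docker
#   systemctl show --property=Environment docker   # verify the variables
```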

Register Kafka NB server in SANnav

This procedure registers the Kafka NB server in SANnav.

It requires the SANnav IP address and administrator credentials.

It may also ask you to confirm the local host's IP address.

- Run the kafka_register.sh script:

```shell
[stor2rrd@xorux]$ cd /home/stor2rrd/stor2rrd/bin
[stor2rrd@xorux]$ ./kafka_register.sh
```
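SANnav connects to the STOR2RRD host on the broker's SSL port 9093 and on the Schema Registry port 8081 (see kafkaClusterUrl and schemaRegistryUrl in the troubleshooting listing below). If SANnav cannot connect, a quick reachability test from a machine on the SANnav side can rule out a firewall. A bash-only sketch; port_open is a hypothetical name and 192.168.0.24 is the example host address from this guide.

```shell
#!/usr/bin/env bash
# port_open HOST PORT (hypothetical helper): succeed when a TCP connection to
# HOST:PORT opens within 3 seconds. Uses bash's /dev/tcp redirection and the
# coreutils timeout command.
port_open() {
  timeout 3 bash -c ': < "/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Usage (broker SSL listener and Schema Registry):
#   for p in 9093 8081; do
#     if port_open 192.168.0.24 "$p"; then echo "port $p open"; else echo "port $p unreachable"; fi
#   done
```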

Management Scripts

The /home/stor2rrd/stor2rrd/bin directory contains several scripts for administering the Kafka NB server:

- kafka_deploy.sh: Creates and starts the Docker containers with the Kafka northbound server during the initial installation and creates the /home/stor2rrd/stor2rrd/kafkanb-run directory. You can also use it to re-deploy the Kafka NB server if necessary.

- kafka_register.sh: Registers the Kafka NB server in SANnav. Requires the SANnav IP address and administrator credentials, and may also ask you to confirm the local host's IP address.

- kafka_listnb.sh: Lists the northbound servers registered in SANnav.

- kafka_stop.sh: Stops the Kafka NB containers without removing them.

- kafka_start.sh: Starts previously stopped Kafka NB containers.

- kafka_unregister.sh: Removes a previously registered Kafka NB server from SANnav.

- kafka_delete.sh: Force-stops and deletes the Kafka NB containers and removes the /home/stor2rrd/stor2rrd/kafkanb-run directory.

Basic Troubleshooting

- Check the log file: /home/stor2rrd/stor2rrd/logs/kafkanb.log

- Check the status of the Kafka NB containers. All three containers must be Up:

```shell
[stor2rrd@xorux]$ docker container ls -a -f name=kafkanb
CONTAINER ID   IMAGE                                   COMMAND                  CREATED      STATUS      PORTS                                        NAMES
028de739d3c7   confluentinc/cp-schema-registry:5.2.2   "/etc/confluent/dock…"   2 days ago   Up 2 days   0.0.0.0:8081->8081/tcp                       kafkanb_schema_registry
d62c791b360d   confluentinc/cp-kafka:5.2.2             "/etc/confluent/dock…"   2 days ago   Up 2 days   0.0.0.0:9092-9093->9092-9093/tcp             kafkanb_broker
721e43c00d91   confluentinc/cp-zookeeper:5.2.2         "/etc/confluent/dock…"   2 days ago   Up 2 days   2888/tcp, 0.0.0.0:2181->2181/tcp, 3888/tcp   kafkanb_zookeeper
```
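The same check can be scripted: `docker container ls` accepts a Go-template `--format`, so it can print just the names and statuses, and a short filter can then confirm that each of the three containers is Up. all_up is a hypothetical helper; the container names are those defined in kafka_docker_compose.yml.

```shell
#!/usr/bin/env bash
# all_up (hypothetical helper): read "<name> <status...>" lines on stdin and
# succeed only if every Kafka NB container is listed with a status starting
# with "Up". Prints the name of each container that is not Up.
all_up() {
  awk '
    BEGIN {
      want["kafkanb_zookeeper"] = 1
      want["kafkanb_broker"] = 1
      want["kafkanb_schema_registry"] = 1
    }
    ($1 in want) && $2 == "Up" { up[$1] = 1 }
    END {
      rc = 0
      for (name in want)
        if (!(name in up)) { print name " is not Up"; rc = 1 }
      exit rc
    }'
}

# Usage on the STOR2RRD host:
#   docker container ls -a -f name=kafkanb --format '{{.Names}} {{.Status}}' | all_up
```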

- List the northbound servers registered in SANnav and check that:

  - kafkaClusterUrl and schemaRegistryUrl match the STOR2RRD host's IP address
  - the FC Port and Switch streams are enabled (streamState: 1)

```shell
[stor2rrd@xorux]$ /home/stor2rrd/stor2rrd/bin/kafka_listnb.sh
# SANnav IP address, user and password
Make sure user SANnav user has a priviledge to register and manage Northbound servers
SANnav IP address [192.168.0.10]:
SANnav username [Administrator]:
SANnav password:
[
  {
    "name": "kafka4stor",
    "kafkaClusterUrl": "192.168.0.24:9093",
    "schemaRegistryUrl": "http://192.168.0.24:8081",
    "caPublicCertificate": "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0t...",
    "id": 18,
    "connectionState": 0,
    "connectionStateReason": "SANnav successfully connected to Northbound Server.",
    "streamDetails": [
      { "streamType": 1, "streamName": "FC Port", "streamState": 1 },
      { "streamType": 2, "streamName": "Eth/GigE Port", "streamState": 0 },
      { "streamType": 3, "streamName": "Extension Tunnel/Circuit", "streamState": 0 },
      { "streamType": 4, "streamName": "Switch", "streamState": 1 },
      { "streamType": 5, "streamName": "Flow", "streamState": 0 }
    ]
  }
]
```
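Both conditions can be checked with jq, which is already on the list of required packages. This sketch assumes you have saved only the JSON part of the kafka_listnb.sh output (without the interactive prompts) to a file, here a hypothetical nb.json; nb_ok is likewise a hypothetical helper.

```shell
#!/usr/bin/env bash
# nb_ok HOST_IP (hypothetical helper): read the JSON array printed by
# kafka_listnb.sh on stdin and succeed when at least one entry points at
# HOST_IP (both kafkaClusterUrl and schemaRegistryUrl) and has both the
# "FC Port" and "Switch" streams enabled (streamState 1). Requires jq.
nb_ok() {
  jq -e --arg ip "$1" '
    [ .[]
      | select(((.kafkaClusterUrl // "") | startswith($ip + ":"))
               and ((.schemaRegistryUrl // "") | contains("//" + $ip + ":")))
      | [ (.streamDetails // [])[]
          | select(.streamName == "FC Port" or .streamName == "Switch")
          | .streamState ]
      | length == 2 and all(. == 1) ]
    | any' >/dev/null
}

# Usage:
#   nb_ok 192.168.0.24 < nb.json && echo "registration looks OK"
```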